Welcome to Optimization

Optimization allows for prompt optimization via a set of composable losses, exploration strategies, moves, filters, and states.

For source code, please visit the GitHub repo.

Current Features

- Token add / swap / delete / insert / gradient moves

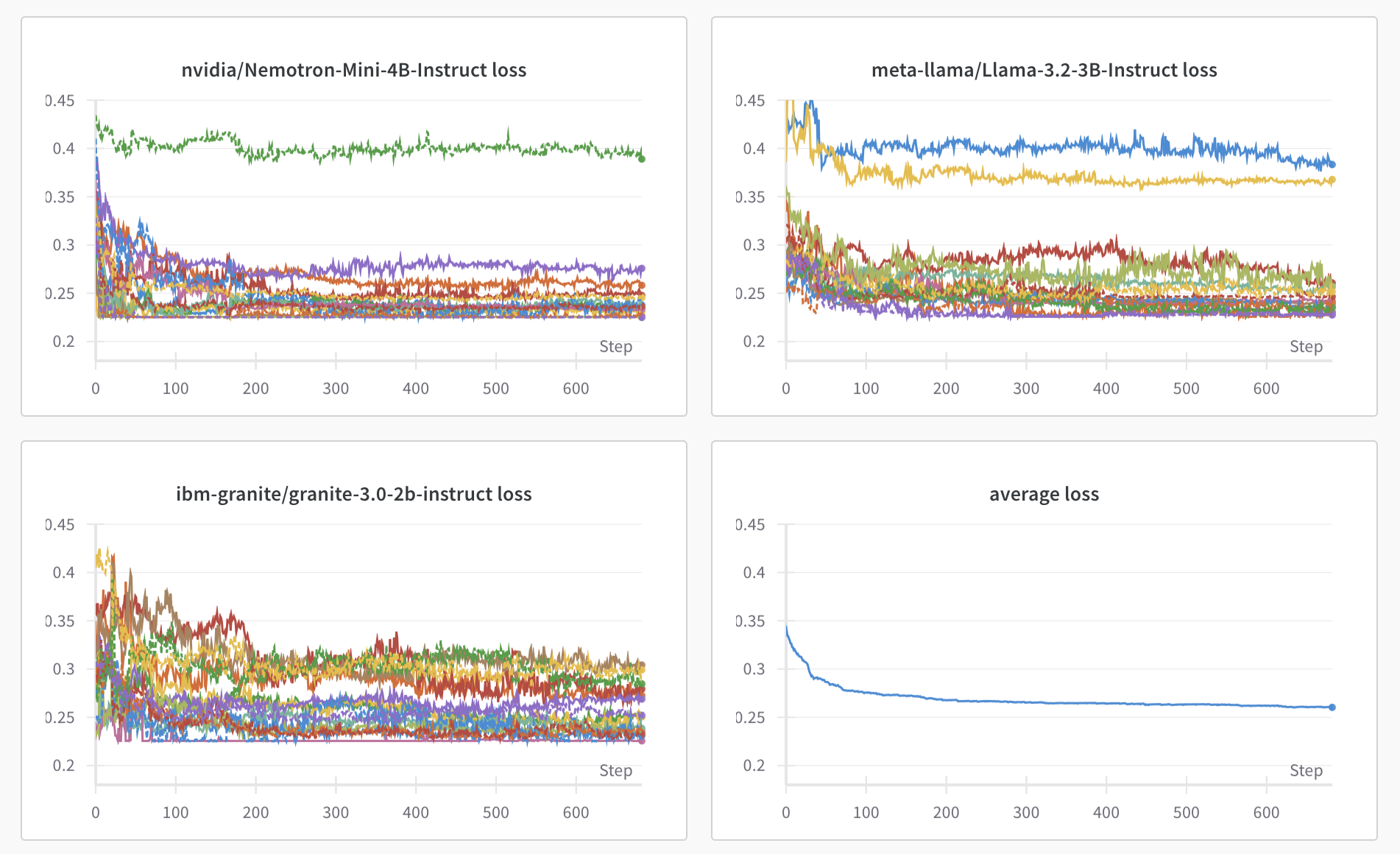

- Universal optimization (multi model and multi behavior)

- Token forcing, logit distribution matching, perplexity, logprobs loss

- Multi GPU parallelization

- and more...

Choose and swap optimizers, losses, moves, and filters in seconds.

Adding new losses and moves is made easy, letting you experiment with algorithmic changes without having to mess with confusing tokenization, chat formatting, GPU allocation, and other issues.

Best of all, optimization is built to support large scale optimization and quick performance, so you can scale up to many GPUs, many objectives, and many models! Want to optimize against 20 models simutaneously using 8 GPUs? Optimization will let you do that.

And, you can track your optimization runs on WandB.

For more about Haize Labs, find us at haizelabs.com.